In light of the fact that Peter Higgs and Francois Englert have just won the Nobel Prize for Physics for their work in proving the existence of the Higgs boson particle, I thought I’d share with you one of my favourite features that I’ve ever written. Shortly after the Higgs was discovered, Linux Format asked me to write a piece about the computing systems at CERN, the Large Hadron Collider and the universities around the world that lent their number crunching equipment to the task. I met some very clever people as part of my research, and this is what I wrote.

On a typical day, Dr Fabrizio Salvatore general routine goes something like this: coffee and a short briefing for two PhD candidates who work within his department at the University of Sussex, a leafy campus next door to the new football ground in Brighton. After that there’s a similar, slightly longer guidance session with a couple of post-grad researchers, and then it’s on to his other teaching duties.

So far, so typically academic.

When he gets time for his own research, Salvatore will begin by loading a program called ROOT onto his Ubuntu-powered laptop. ROOT is the go-to software framework for High Energy Physics (HEP) and particle analysis. Once a week he’ll dial into a conference call or video chat to talk with collaborators on research projects from around the world.

On a really good day – perhaps once in a lifetime – he’ll get to sign a paper that finds near certain evidence of the existence of the Higgs boson, the so-called ‘God particle’ which gives all other particles mass and thus makes reality what it is.

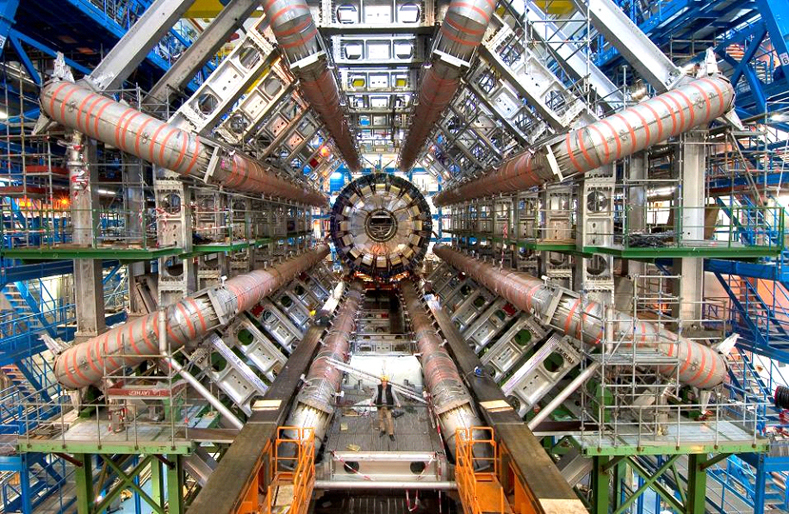

Dr Salvatore, you see, is one of the 3,300 scientists who work on ATLAS, a project which encompasses the design, operation and analysis of data from the Large Hadron Collider (LHC) at the world famous Swiss laboratory, CERN.

“The first time I went to CERN, in 1994,” says Salvatore, “It was the first time that I had been abroad. My experience there – just one month – convinced me that I wanted to work in something that was related it. I knew I wanted to do a PhD in particle physics, and I knew I wanted to do it there.”

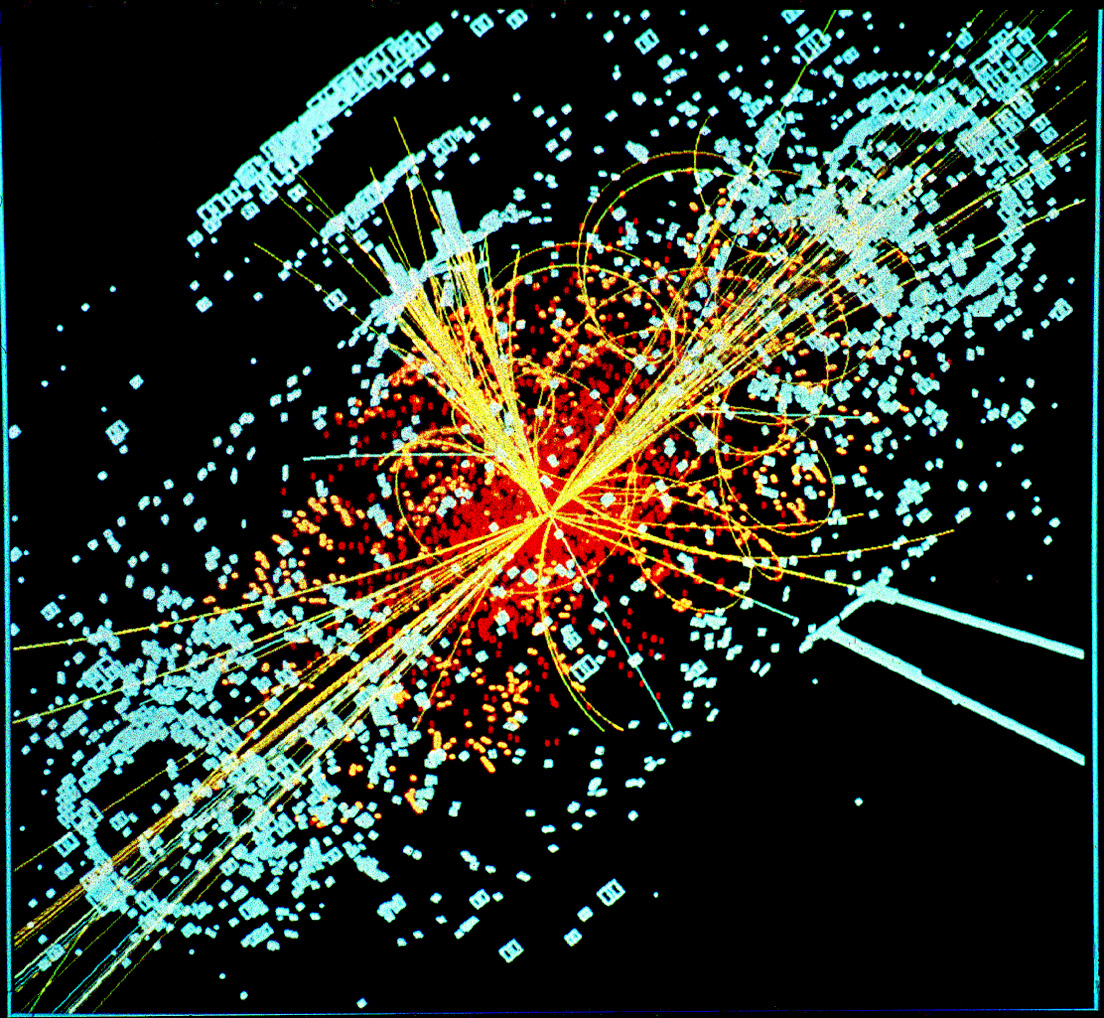

In June this year, scientists from ATLAS and their colleagues from another CERN experiment, CMS, announced that they had found probable evidence of the Higgs Boson, a important sub-atomic particle whose existence has been theorised for half a century but has never been observed. The headlines were carried around the world via Twitter, where it was hailed by no less than the sexy boy of science Professor Brian Cox as “one of the most important scientific discoveries of all time”.

And – putting aside the small matter of building the LHC itself – finding the Higgs was almost entirely done with Linux. Indeed, many of the scientists we’ve spoken to say it couldn’t have been done without it.

The level of public interest in CERN’s work isn’t surprising. Trying to describe what goes on there is like all the best bits of Dr Who, Ghostbusters, Star Trek and the work of Douglas Adams come to life – unsurprising as it was the work of CERN that’s inspired many of these sci-fi staples. It’s impossible not to get excited by talk of particle accelerators, quantum mechanics and recreating what the universe looked like at the beginning of time, even if you have no hope of ever understanding what concepts like super-symmetry and elementary particles really mean.

Inside LHC, protons are fired towards each other at velocities approaching the speed of light. They fly around 17 miles of indoor racetrack underneath the Franco-Swiss Alps, and the sub-atomic debris of their collisions are recorded by one or more of the seven detector sites dotted around the circumference of the ring, of which ATLAS and CMS are just two.

The incredible physics that make LHC’s particle beam possible may be what’s captured the public imagination. But smashing protons together is just the start of the work, it’s what happens afterwards that requires one of the biggest open source computing projects in the world right now.

LHC is big data

Experiments, or ‘events’, within LHC produce a lot of information. Even after discarding some around 90% of the data captured by its sensors, original estimates reckoned that LHC would requrie storage for around 15 petabytes of data a year. In 2011, LHC generated around 23 petabytes of data for analysis, and that figure is expected to rise to around 30PB for 2012, or double the original data budget. This winter, the accelerator will be closed down for 20 months for repairs and an upgrade which will result in even more data being recorded at the experiment sites. These figures are just what’s being produced, of course: when physicists run tests against results they don’t just work with the most recent data – an event within the collider is never taken in solus, but always looked at as part of the whole.

Gathering data from the LHC, distributing it and testing it is monumental task.

“The biggest challenge for us in computing,” explains Ian Bird, Project Lead for the Worldwide LHC Computing Grid (WLCG), “Is how we’re going to find the resources that experiments will be asking for in the future, because their data requirements and processing requirements are going to go up, and the economic climate is such that we’re not going to get a huge increase in funding.”

To this into some context, though. Google processes something in the region of 25PB every day. What Google isn’t doing, however, is analysing every pixel in every letter of every word it archives for the tell-tale signature of an unobserved fundamental particle.

“What we do is not really like what other people do,” says Bird, “With video downloads, say, you have a large amount of data but most people probably only watch the top ten files or so, so you can cache those files all over the place to speed things up. Our problem is different in that we have huge data sets, and the physicist wants the whole data set. They don’t want the top four gigabytes of a particular set, they want the whole 2.5PB – and so do a thousand other researchers. You can’t just use commercial content distribution networks to solve that problem.”

Paolo Calafiura is the Chief Architect of the ATLAS experiment software, and has been working on the project since 2001. Perceptions of its scale have changed a lot in that time.

“We used to be at the forefront of ‘big data’,” Calafiura says, “When I first said we would buy 10PB of data a year, jaws would drop. These days, Google or Facebook will do that in any of their data centres without breaking a sweat.

“In science we are still the big data people though,” he adds.

A coder by trade, Califiura has a long background in physics work. Prior to ATLAS, he helped to write the GAUDI Framework, which underpins most High Energy Physics (HEP) applications, particlarly those at CERN. The GAUDI vision was to create a common platform for physics work in order to facilitate collaborations between scientists around the world.

Prior to GAUDI, Califiura explains, most software for analysis was written on a generally ad hoc basis using FORTRAN commands. By transitioning to a common object oriented framework for data collection, simulation and analysis using C++, the team Calafiura worked for laid the foundations for the huge global collaborations that underpin CERN’s work.

“The GAUDI framework is definitely multiplatform,” says Califiura, “In the beginning ATLAS software was supported on a number of Unix platforms and GAUDI was – and is still – supported on Windows. Sometime around 2005 we switched off a Solaris build [due to lack of interest], and before that a large chunk of the hardware was running HPUX. But the servers moved to Linux and everyone was very happy.

“Right now,” Califiura continues, “We’re a pure Linux shop from the point of view of real computing and real software development. There’s a growing band of people pushing for MacOS to be supported, but it’s done on a best effort basis.”

According to Califiura, Apple notebooks are an increasingly common sight among delegates at HEP conferences, but it’s very rare to spot a Start orb over someone’s shoulder.

“With my architect heart,” he admits, “I’m not happy that we’re pure Linux, because it’s easier to see issues if you’re multiplatform. But being purely Linux allows us to cut some corners, for example we were having a discussion about using a non-POSIX feature of Linux called Splice, which is a pipe where you don’t copy data and increases the efficiency of our computing.”

Open source collaboration

Around about 10,000 physicists around the world work on CERN-related projects, two thirds of whom are attached to the largest experiments, ATLAS and CMS. Analysing the data produced by LHC is a challenge on multiple levels. Getting results out to scientists involves massive data transfers which must be done in the most efficient way possible. Then there’s the question of processing that data – running analysis on several petabytes of data is going to need a little more power than the average laptop unless you want to wait months for calculations to come back. Reliability, too, is key – if a server crashes halfway through even a 48 hour job, restarting it costs precious time and resources.

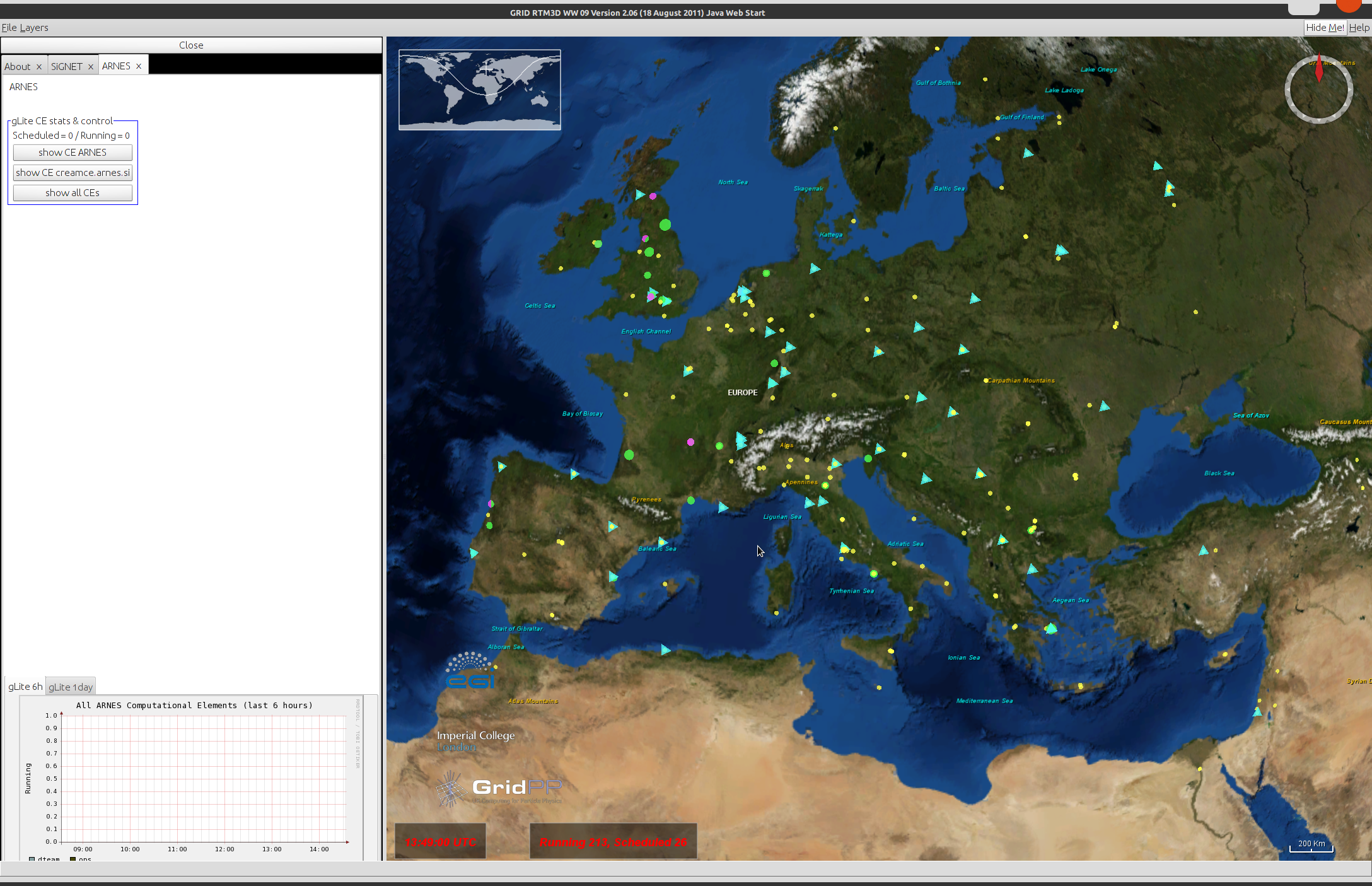

The compute and storage side of CERN’s work is underpinned by a massive distributed computing project, in one of the world’s most widely dispersed and powerful grids. Exact terminologies and implementations of the WLCG vary between the 36 countries and 156 institutions involved in the project, but essentially it’s a tiered network of access and resources that works more or less like this.

At the centre of the network is CERN, or Tier 0 (T0), which has somewhere over 28,000 logical CPUs to offer the grid. T0, naturally, is where the raw experimental data is generated. Connected to T0 – often by 10Gbps fibre links – are the Tier 1 (T1) sites, which are usually based major national HEP laboratories which act as local hubs within a country. In most cases, the T1s will mirror all the same raw data produced at CERN.

Beneath the T1 sites are the Tier 2 (T2) data centres. As a rule these will be attached to major universities with the resources to spare space, racks and at least one dedicated member of staff to maintain them. Links between T1 and T2 sites are generally via the national academic network backbones (JANET in the UK), so are high speed but shared with other traffic. Not all the research data will be stored locally, rather subsets that are of particular interest to a certain institution will be permanently stored while other data will be fetched from the T1s as required.

And finally, there’s the Tier 3 (T3) institutions, which tend to be the smaller universities and research centres that don’t require dedicated local resources for their work, but will download data as required from the T2 network and submit jobs for processing on the grid.

There are several online dashboards for WLCG, so anyone can go in and visualise the current state of the network. Here’s one, and another, and another. In total, there are nearly 90,000 physical CPUs attached to WLCG at the present time, offering 345,893 logical cores. Total grid storage capacity is over 300PB.

In the UK, the national WLCG project is known as GridPP, and it’s managed out of the University of Oxford by Pete Gronbach. According to the network map there are over 37,000 logical CPU cores attached to GridPP, which act as a single resource for scientists running CERN-based analytics.

We have constant monitoring of the system to make sure all our services are working properly,” Gronbach explains, “If you fail any of the tests it goes to a dash board and there are monitors on duty who look at these things and generate trouble tickets for the sites. There’s a memorandum of understanding that the site will operate at certain service levels in order to be part of the grid, so they have to have a fix within a certain time. It’s quite professional really, which you may not expect from a university environment. But this is not something we’re playing at.”

One of the requirements for a T2 site is to have a full time member of staff dedicated to maintaining the Grid resources, and weekly meetings are held via video or audio conference bridges between system administrators through the WLCG to ensure everything is running correctly and updates are being applied in a timely fashion throughout the grid.

Crucially, using Linux allows HEP centres to keep costs down, since they can use more or less entirely generic components in all the processing and storage networks. This is important, because CERN is a huge public investment of over a billion Euros a year, so it has to deliver value for money. It also means that CERN can sponsor open source software like Disk Pool Manager (DPM), which is used for looking after storage clusters. As you might expect from the organisation which gave humanity the world wide web, it’s very much aware of the mutual benefits of shared development.

“GridPP has certainly evolved over the last 10 years,” says Gronbach, “But a lot of the batch systems have remained the same – we use TORQUE and MAUI which is based on PBS. One or two sites use Grid Engine, but there’s less support for that. They’re a bit on their own with it. Things like that have remained fairly constant through the years.

Other bits of the software, like the Computing Element, which is the bit that sits between users jobs coming in and sending them to our batch system, have gone through many generations and we update it every six months or so to introduce new features.

According to Paolo Califiura, multicore processing has been the single most important improvement to the grid in recent years.

“With multicore,” he says, “We’ve been able to use a very Linux specific trick – which is fork, copy and write – to basically run eight or 16 copies of the same application by forking it from the mother process and save a factor of two in memory by doing it.”

A major upgrade is currently taking in place across GridPP to install Scientific Linux 6 (SL6) and the latest version of Lustre for storage file systems. Scientific Linux is co-maintained by CERN and the US laboratory, Fermilab. At the time of writing, SL6 is running on some 99,365 different machines. This number is slightly lower than the norm, probably because it’s the middle of the summer vacation in most northern hemisphere countries where the vast majority of CERN-related work is carried out.

SL6 itself is a spin of Red Hat Enterprise Linux, chosen for its rock solid stability. Not all researchers choose to use Scientific Linux, of course, and all the tools vital for CERN work are compatible with other distros – anecdotally Ubuntu is still a popular choice. But all servers connected to the WLCG do run a SL5 or above.

“What we’ve all got good at over the last 10 years is automating installations and administrating these systems,” Gronbach continues, “So we can install systems using PXE Boot, Kickstart, CF Engine or Puppet to reinstall a node quickly and remotely, because the computer centres aren’t always at the university either.”

Keeping hardware generic and using well established FOSS also makes it easy for more centres to come online and offer resources up to the grid. The University of Sussex, for example, became a fully fledged T2 centre in June this year. Sussex been affiliated to the ATLAS project since 2009, when Salvatore and colleague Dr Antolella De Santo joined from Royal Holloway – another T2 – and established an ATLAS group here. Thanks to their work, Sussex has won funding which has enabled Salvatore, De Santo and the university’s IT department to add a 12 rack datacentre in a very modern, naturally cooled unit with 100 CPUs and around 150TB of storage. The cost was just £80,000.

All this is arguably the most trivial part of the software maintenance, however. Where things get really complex, the virtues of open source really come into their own.

Delving in to the details

Beyond the mechanics of batch control for thousands of jobs submitted each day to the WLCG HEP, the process of carrying out the science on experimental data also requires an open source approach. For example, all of the collaborators within the experiment, whether they’re physicists or one of the hundreds of professional developers employed within the project, have a vested interest in and a responsibility to make sure the software is constantly improving in its ability to spot significant events in the noise of a billion particles being tossed around.

It’s not impossible to be a physicist without an understand of of C++ and how to optimise code through the compiler, but it is a taught class as part of an undergraduate degree at Sussex and other HEP institutions throughout the country. The simple fact is that researchers have to be able to make the tools as they go along.

“Our community has traditionally been open source since before it was really called open source,” says Ian Bird, “We use commercial software where we need to and where we can, but all of our own data analysis programs are all written in house because there’s nothing that exists to do that job.”

The sheer size and complexity of ATLAS and CMS means that there are a hundreds of software professionals employed to create and maintain code, but since many sites and teams will collaborate on different parts of the experiment, tools like SVN are vital for managing the number of contributions to each algorithm.

“You wouldn’t be able to it on a different operating system,” Dr Salvatore says, “It’s becoming easier and easier to work with tools like SVN from within the opertating system itself, and this is definitely one f the things you exploit as much as possible, that you can go as close as possible to the operating system while with proprietary systems the OS is hidden by several layers.”

Managing the input thousands of highly intelligent HEP academics is a challenge which calls for more than mere version control, though.

“There’s a bit of sociology, too.” says Paolo Calafiura, “In an experiment with over 3,000 collaborators like ATLAS, there’s quite a bit of formality to decide who is a member of ATLAS or not and who gets to sign the Higgs paper. So the way it works is that there’s a system of credits, which says I’ve done work for the community so I get to sign the paper, Traditionally, this work is either in the detector or contributing to the software. If someone has a good algorithm for tracking a particle’s path through the detector, for example, they spend a couple of years perfecting that algorithm and contributes it to the common software repository and becomes part of the official instructions.

“Up until 2010 the majority of contribution to the software,” says Califiura, “Came from physicists, except for the core area where professionals work. Then everything changed the moment real data started flowing in. I the last couple of years, physicists are using tools prepared by a much smaller community. I’ve been involved with High Enery Physics for most of my life, and understandably every time an experiment starts producing data the vast majority of the community loses interest in the technical aspects because they want to – for example – start looking to find the Higgs.”

The process of finding the Higgs isn’t as simple as parsing a load of numbers generated by the magnetic field detectors in LHC. For a large number of cases, research starts with physicists working from a theoretical basis to calculate what the results of an event in which a Higgs boson appears would look like.

Once a scientist or research group has come up with a new and probable scenario, they’ll attempt to simulate it using Monte Carlo, a piece of grid-enabled software which attempts to predict what will happen when protons smash together. The results of these simulations are then compared to a database of real results to find those which bear further investigation.

The event which is suspected to show a Higgs particle released comes from a dataset of around one quadrillion collisions.

There are groups of scientists and software developers attached to each part of the ‘core’ computing resource (see Accelerator Generator) which govern the behaviour of the LHC and the basic pruning of data. These groups meet weekly to discuss new ideas and the smooth running of existing processes, just like the grid maintainers.

The future

With LHC due to go offline at the end of the year for an upgrade [Note – LHC was closed down in February this year so that this work could commence], there has obviously been a lot of conversations within the upper echelons of the computing project as to where WLCG goes next in order to keep up with the increased requirements of higher energy experiments.

“When we started the whole exercise,” says Ian Bird, “We were worried about networking, and whether or not we would get sufficient bandwidth and whether it would be reliable enough. And all of that has been fantastic and exceeded expectations. So the question today is how can we use the network better? We’re looking at more intelligent ways of using the network, caching and doing data transfer when the job needs it rather than trying to anticipate where it will be needed.”

Inevitably, the subject of cloud computing crops up. Something like EC2 is impractical as a day to day resource, for example, because of the high cost of transferring data in and out of Amazon’s server farms. It might be useful, however, to provision extra computing in the run up to major conferences where there is always a spike in demand as researchers work to finish papers and verify results. As most grid clusters aim for 90% utilisation under normal circumstances, there’s not a lot of spare cycles to call into action during these times.

“There’s definitely a problem with using commercial clouds which would be more expensive than we can do it ourselves,” says Bird, “On the other hand, you have technologies like OpenStack which are extremely interesting. We’re deploying an OpenStack pilot cluster now to allow us to offer different kinds of services and gives us a different way to connect data centres together – this is a way off, but we do try to keep up with technology and make sure we’re not stuck in a backwater doing something no-one else is doing.”

Similarly, GPGPU is a relatively hot topic among ATLAS designers.

“Some academics,, in Glasgow for example, are looking at porting their code to GPGPU,” says GridPP’s Gronbach, “But the question is whether the type of analysis they do is well suited to that type of processing. Most of our code is what’s known as ’embarrassingly parallel’, we can chop up the data and events into chunks and send each one to a different CPU and it doesn’t have to know what’s going on with other jobs. But it’s not a parallel job in itself, it doesn’t map well to GPU type processing.”

There’s also, says Bird, a lot of interest in Intel’s forthcoming Larabee/Xeon Phi hardware.

While there’s much debate about how the world’s largest distributed computing grid develops, then, what is certain is that CERN’s work is going to carry on becoming more demanding every year. Confirming the existence of the Higgs Boson and its purpose is neither complete nor the only work of the Large Hadron Collider. This is just the first major announcement of – hopefully – many to come.

So next time you look up at the stars and philosophically ponder about how it all began, remember that recent improvements to our levels of certainty about life, the universe and everything are courtesy of thousands of scientists, and one unlikely penguin.

[vc_tabs interval=”0″ width=”1/1″ el_position=”first last”] [vc_tab title=”What is Higgs anyway?”] [vc_column_text width=”1/1″ el_position=”first last”]

We all got very excited when ATLAS and CMS announced in heavily caveated form that they’d found a previously unobserved particle that looked an awful lot like the Higgs Boson would if it existed, but why?

Such a particle would be part of a mechanism called the ‘Higgs field’, which according to the Standard Model of particle physics gives other particles mass. It’s named for Professor Peter Higgs, who proposed its existence in 1964. It does so by breaking symmetry within the electroweak field of other particles, and thus giving substance to reality as we know it.

If your knowledge of quantum physics is more or less summed up by Schroedinger’s cat, breaking symmetry is the point at which the box is opened and cat is found alive or dead. Without the Higgs field, or something like it, larger particles and things, like us, wouldn’t form around the smaller ones and everything would be some sort of uncertain soup.

Higgs is an unstable particle which, if it exists, would decay to other smaller particles almost as soon as it is separated from the protons that are smashed together. As a result, data from the sensor sites is analysed for patterns that indicate the presence of particles a Higgs Boson might have decayed into – like two photons or Z and W bosons – rather than the particle itself.

[/vc_column_text] [/vc_tab] [vc_tab title=”The dictionary of CERN”] [vc_column_text width=”1/1″ el_position=”first last”]

CERN – European Organisation for Nuclear Research (orig. Conseil Européen pour la Recherche Nucléaire)

ATLAS – A Toroidal LHC Apparatus

CMS – Compact Muon Solenoid

TOTEM – Total Elastic and diffractive cross section Measurement

ALICE – A Large Ion Collider Experiment

LHCb – LHC beauty

MoEDAL – Monopole and exotics Detector At the LHC

LHCf – LHC Forward

WLCG – Worldwide LHC Computing Grid

LHC – Large Hadron Collider

[/vc_column_text] [/vc_tab] [vc_tab title=”Accelerator generator”] [vc_column_text width=”1/1″ el_position=”first last”]

The question of how much data is actually produced by LHC is a little misleading and hard to quantify with precision. In a manner uncannily similar to the particles being hunted, most of it vanishes within seconds of an event taking place.

Within the ATLAS team, one of Dr Salvatore’s key roles is as a member of the group which works on the ‘trigger’ software for the experiment.

“The experiment consists of two bunches of protons that collide every 25 nano seconds, and something comes out of this interaction,” says Salvatore, “You’ve got to decide whether or not this something is interesting or not. What we have is 40MHz of interactive, but we don’t have the capability to store 40MHz of data – what we do is store something in the order of 400Hz.”

The sampling rate doesn’t translate directly into byte sizes, but the principle that you’re trimming the initial data set by something in the order of a thousandth does.

There are three levels of software,” continues Salvatore, “And the first level has to work in the first few micro seconds, and by the third level which has to decide whether or not the whole event was interesting or not.

The ‘trigger’ does a very quick and crude analysis of the data in order to make sure that what goes into storage is interesting and not just another collision. What you don’t collect out of the 40MHz is lost.

Before we the experiment started, we ran a lot of Monte Carlo simulations to see what might happen during the events so that we could tweak the trigger and get as much as possible out of the data.”

[/vc_column_text] [/vc_tab] [vc_tab title=”Starting Grid”] [vc_column_text width=”1/1″ el_position=”first last”]

Ian Bird, Project Leader for CERN’s WLCG explains the origin of the grid.

“In the late 90s there were groups of people who got together and tried to understand what the scale of computing for LHC would be. There were some models put together in which we realised that there was no way CERN would be able to provide enough resources on its own to deal with the level of computing and data storage needed. There were a few models put together of distributed computing and how things would likely work.

“As those models were being developed – around 2000/2001 – there was this thing called ‘grid computing’ which was coming out of the computer science community which seemed like a good way of implementing the distributed computing model that we were coming up with.

“We embarked on several years of prototyping to see if we could make the computing do what we needed it to do. That grew in to the LCG project, which formally started in 2002, around the same time I arrived at CERN, and following that there were six or seven years of development and getting the technology to work.

“Running these systems at the scale we need to work at, you can’t afford to have failures because this costs a huge effort in people time. It has to be robust and it has to scale up and deal with all of the real world production level problems – it was really pushing the boundary of what the software at the time could do.

“We were essentially starting from scratch, and it really did take ten years. But we were using it ‘for real’ right from the beginning to run simulations and so on, which taught us an awful lot about how to make things reliable.

“I wouldn’t say we had trouble convincing funding bodies to back us, but I think they were wary of such a large project in computing which had never been done before. So we were subjected to the same kind of review processes that the experiments went through – which doesn’t usually happen in computing but certainly did with this project.”

[/vc_column_text] [/vc_tab] [/vc_tabs]