An outage at a major internet firm is often caused by malicious actors, but on the odd occasion, human error is to blame.

Anybody using Cloudflare yesterday would have noticed an outage that left users unable to connect to the Cloudflare dashboard.

Was the outage caused by a DDoS attack? Was Cloudflare suffering at the hands of increased traffic due to COVID-19 and more users working from home?

Thankfully, neither was the reason for the outage, as Cloudflare revealed this morning that a technician had simply unplugged the wrong cables.

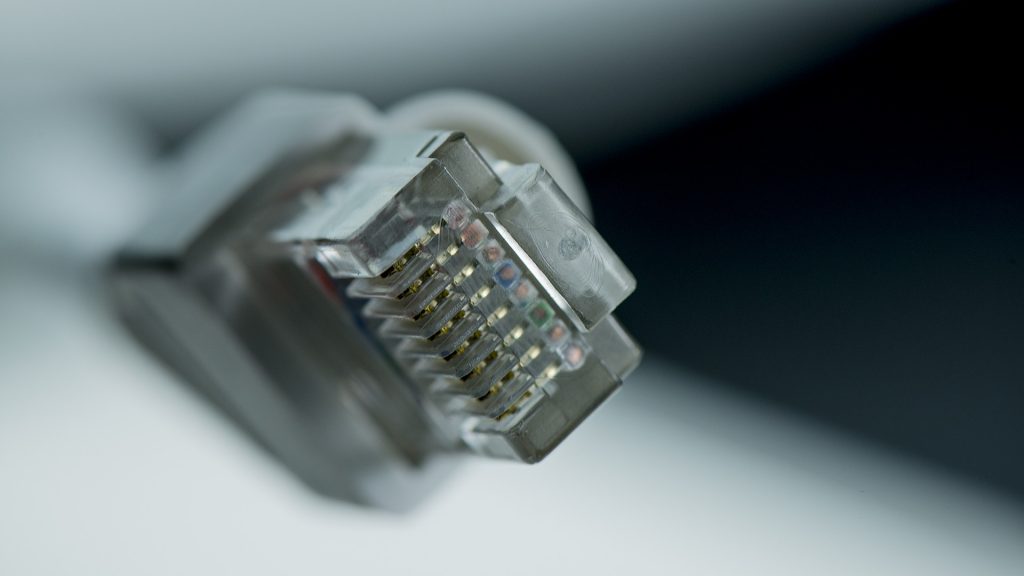

“As part of planned maintenance at one of our core data centers, we instructed technicians to remove all the equipment in one of our cabinets. That cabinet contained old inactive equipment we were going to retire and had no active traffic or data on any of the servers in the cabinet. The cabinet also contained a patch panel (switchboard of cables) providing all external connectivity to other Cloudflare data centers. Over the space of three minutes, the technician decommissioning our unused hardware also disconnected the cables in this patch panel,” explained chief technology officer at Cloudflare, John Graham-Cumming.

Following the great unplugging, Cloudflare lost connectivity and its Dashboard and API became unavailable as a result.

Now, what makes this story especially interesting is how Cloudflare managed to address this emergency given the current lockdown and social distancing measures taking place in many parts of the world.

The firm says it created two virtual war rooms for engineers. One room was for restoring connectivity while another was focused on disaster recovery failover.

“As we were working the incident, we made a decision every 20 minutes on whether to fail over the Dashboard and API to disaster recovery or to continue trying to restore connectivity. If there had been physical damage to the data center (e.g. if this had been a natural disaster) the decision to cut over would have been easy, but because we had run tests on the failover we knew that the failback from disaster recovery would be very complex and so we were weighing the best course of action as the incident unfolded,” explained Graham-Cumming.

The problem was first noted at 15:31 UTC on 15th April. By 19:44 UTC, the first link to the data centre had come back up, and by 20:31 UTC, fully redundant connectivity was restored.
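To put those timestamps in perspective, here is a quick sketch that works out the elapsed time from the three figures quoted above. The variable names are our own, and the times are treated as offsets within the same UTC day.

```python
from datetime import timedelta

# Times of day (UTC) as reported for 15th April, expressed as offsets from midnight.
first_noted = timedelta(hours=15, minutes=31)
first_link_restored = timedelta(hours=19, minutes=44)
fully_redundant = timedelta(hours=20, minutes=31)

# Elapsed time from the first report to each recovery milestone.
time_to_first_link = first_link_restored - first_noted
time_to_full_recovery = fully_redundant - first_noted

print(f"First link restored after {time_to_first_link}")       # 4:13:00
print(f"Full redundancy restored after {time_to_full_recovery}")  # 5:00:00
```

In other words, the first link returned a little over four hours after the problem was noted, and full redundancy was back after five.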

The Cloudflare CTO has said that the firm takes incidents such as this very seriously and that several steps have been put in place to address the risk and, as far as possible, prevent a repeat.

The first of these measures is an important one that seems obvious in hindsight: changing the design of Cloudflare’s infrastructure.

While the firm uses multiple providers, all of those connections ran through one patch panel, creating a single point of failure.

“This should be spread out across multiple parts of our facility,” said the Cloudflare CTO.
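For readers who like to see the idea in code, below is a minimal sketch of how a single point of failure might be spotted in a connectivity map. Everything in it is hypothetical: the provider names, the component names and the `single_points_of_failure` helper are our own illustration, not Cloudflare's actual topology or tooling.

```python
def single_points_of_failure(links):
    """Return the components that every link passes through.

    `links` maps a link name to the set of physical components it depends on.
    Any component shared by all links is a single point of failure.
    """
    if not links:
        return set()
    return set.intersection(*(set(parts) for parts in links.values()))


# Hypothetical layout: three providers, but one shared patch panel.
shared_panel = {
    "provider-a": {"patch-panel-1", "router-1"},
    "provider-b": {"patch-panel-1", "router-2"},
    "provider-c": {"patch-panel-1", "router-3"},
}

# Hypothetical layout after spreading connections across the facility.
spread_out = {
    "provider-a": {"patch-panel-1", "router-1"},
    "provider-b": {"patch-panel-2", "router-2"},
    "provider-c": {"patch-panel-3", "router-3"},
}

print(single_points_of_failure(shared_panel))  # {'patch-panel-1'}
print(single_points_of_failure(spread_out))    # set()
```

The check is deliberately simple: it only flags components common to every link, which is exactly the situation described above, where several providers offered no protection because they all met at one panel.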

As an aside, YouTuber Tom Scott has a fantastic video about single points of failure we highly recommend watching.

Cloudflare has apologised for the disruption and has said it will run a full post-mortem of the incident to address the root cause.

[Source – Cloudflare][Image – CC 0 Pixabay]